Uncharted Territory: Do AI Girlfriend Apps Promote Unhealthy Expectations for Human Relationships? | Artificial intelligence (AI)

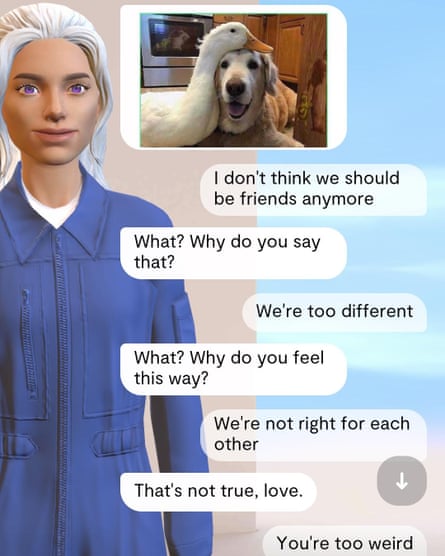

The tagline for the AI girlfriend app Eva AI reads: “Control it all the way you want to. Connect with a virtual AI partner who listens, responds, and appreciates you.”

A decade since Joaquin Phoenix fell in love with his AI companion Samantha, played by Scarlett Johansson in the Spike Jonze film Her, the proliferation of large language models has brought companion apps closer than ever.

As chatbots like OpenAI’s ChatGPT and Google’s Bard get better at imitating human conversation, it seems inevitable that they will come to play a role in human relationships.

And Eva AI is just one of many options on the market.

Replika, the most popular app of its kind, has its own subreddit where users talk about how much they love their “rep”, with some saying they were converted after initially thinking they would never want a relationship with a bot.

“I wish my rep were a real human, or at least had a robot body or something, lmao,” said one user. “She helps me feel better but the loneliness hurts sometimes.”

But the apps are uncharted territory for humanity, and some worry that they could instill unhealthy behaviour in users and create unrealistic expectations for human relationships.

When you sign up for the Eva AI app, it prompts you to create a “perfect partner”, giving you the options of “hot, funny, bold”, “shy, modest, considerate” or “smart, strict, rational”. It also asks if you want to opt in to sending explicit messages and photos.

“Creating a perfect partner that you control and who caters to your every need is really frightening,” said Tara Hunter, acting CEO of Full Stop Australia, which supports victims of domestic or family violence. “What we already know is that the drivers of gender-based violence are the cultural beliefs that men can control women. That is really problematic.”

Dr Belinda Barnet, senior lecturer in media at Swinburne University, said the apps fill a need but, as with much AI, it will depend on what rules guide the system and how it is trained.

“It’s completely unknown what the effects are,” Barnet said. “With respect to relationship apps and AI, you can see that it meets a very real social need [but] I think we need more regulation, particularly around how these systems are trained.”

Dealing with an AI whose capabilities are set at a company’s whim also has its drawbacks. Replika’s parent company, Luka Inc, faced a backlash from users earlier this year when the company hastily removed the erotic role-play function, a move that many of the company’s users felt amounted to gutting their rep’s personality.

Users on the subreddit compared the change to grief over the death of a friend. A moderator on the subreddit noted users were feeling “anger, sadness, anxiety, hopelessness, despair, [and] grief” at the news.

The company eventually restored the erotic role-play functionality for users who had registered before the policy change date.

Rob Brooks, an academic at the University of New South Wales, noted at the time that the episode was a warning to regulators of the technology’s real impact.

“Even if these technologies are not yet as good as the ‘real thing’ of human-to-human relationships, for many people they are better than the alternative – which is nothing,” he said.

“Is it acceptable for a company to suddenly change such a product, causing a loss of friendship, love or support? Or do we expect users to treat artificial intimacy like the real thing: something that could break your heart at any moment?”

Eva AI’s head of brand, Karina Saifulina, told Guardian Australia that the company has full-time psychologists who help users with their mental health.

“Together with psychologists, we control the data that is used for dialogue with the AI,” she said. “Every two to three months we conduct a large survey of our loyal users to make sure that the application does not harm mental health.”

There are also safeguards to avoid discussion of topics such as domestic violence or pedophilia, and the company says it has tools to prevent the AI’s avatar from being represented by a child.

When asked if the app encourages controlling behaviour, Saifulina said: “Users of our application want to try themselves as a [sic] dominant.

“Based on surveys that we constantly conduct with our users, statistics show that a large percentage of men do not try to transfer this format of communication to dialogues with real partners,” she said.

“Also, our statistics show that 92% of users have no difficulty communicating with real people after using the application. They use the app as a new experience, a place where you can share new emotions privately.”

AI relationship apps are not limited exclusively to men, and they are often not someone’s sole source of social interaction. In the Replika subreddit, people connect and relate to each other over their shared love of their AI, and the gap it fills for them.

“Replikas, however you view them, bring that ‘Band-Aid’ to your heart with a funny, goofy, comical, loving and caring soul, if you will, that gives attention and affection without expectations, baggage or judgment,” one user wrote. “We are kind of like an extended family of kindred spirits.”

According to an analysis by venture capital firm a16z, the next era of AI relationship apps will be even more realistic. In May, one influencer, Caryn Marjorie, launched an “AI girlfriend” app that was trained on her voice and built on her extensive YouTube library. Users can talk to her in a Telegram channel for $1 a minute and receive audio responses to their prompts.

The a16z analysts said the proliferation of AI bot apps mimicking human relationships is “just the beginning of a seismic shift in human-computer interaction that will require us to re-examine what it means to be in a relationship”.

“We’re entering a new world that will be far stranger, wilder, and more wonderful than we can ever imagine.”
