
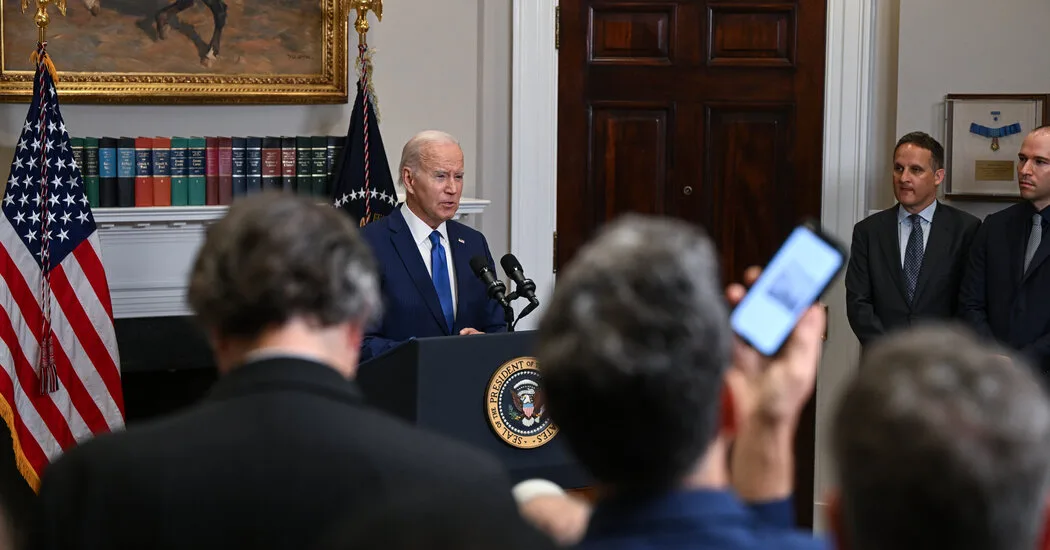

This week, the White House announced that it had secured “voluntary commitments” from seven leading AI companies to manage the risks posed by artificial intelligence.

Getting the companies (Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI) to agree on anything is a step forward. They include bitter rivals with subtle but important differences in the ways they’re approaching AI research and development.

Meta, for example, is so eager to get its AI models into the hands of developers that it has open-sourced many of them, putting their code out in the open for anyone to use. Other labs, such as Anthropic, have taken a more cautious approach, releasing their technology in more limited ways.

But what do these promises actually mean? And are they likely to change much about how AI companies operate, given that they aren’t backed by the force of law?

Given the potential stakes of AI regulation, the details matter. So let’s take a closer look at what’s being agreed to here and the potential impact.

Commitment 1: The companies commit to conducting internal and external security testing of their AI systems before they are released.

Each of these AI companies already performs security testing, often called “red-teaming,” before releasing its models. On one level, this isn’t really a new commitment. And it’s a vague promise. It doesn’t go into much detail about what kind of testing is required, or who will do the testing.

In a statement accompanying the commitments, the White House said only that testing of AI models “will be carried out in part by independent experts” and will focus on AI risks “such as biosecurity and cybersecurity, as well as its broader societal effects.”

It’s a good idea to get AI companies to publicly commit to continuing this kind of testing, and to encourage more transparency in the testing process. And there are some types of AI risk, such as the danger that AI models could be used to develop bioweapons, that government and military officials are probably better equipped than companies to evaluate.

I’d like to see the AI industry agree on a standard battery of safety tests, such as the “autonomous replication” tests that the Alignment Research Center runs on pre-release models from OpenAI and Anthropic. I’d also like to see the federal government fund these kinds of tests, which can be expensive and require engineers with significant technical expertise. Right now, many safety tests are funded and overseen by the companies themselves, which raises obvious conflict-of-interest questions.

Commitment 2: The companies commit to sharing information on managing AI risks across the industry and with governments, civil society and academia.

This commitment is also a bit vague. Many of these companies already publish information about their AI models, usually in academic papers or corporate blog posts. A few of them, including OpenAI and Anthropic, also publish documents called “system cards” that describe the steps they’ve taken to make their models safer.

But they have also withheld information on occasion, citing safety concerns. When OpenAI released its latest AI model, GPT-4, this year, it broke with industry custom and chose not to disclose how much data it was trained on, or how large the model was (a metric known as “parameters”). It said it declined to release this information because of concerns about competition and safety. It also happens to be the kind of data that tech companies like to keep away from competitors.

Under these new commitments, will AI companies be compelled to make that kind of information public? What if doing so risks accelerating the AI arms race?

I suspect the White House’s goal is less about forcing companies to disclose their parameter counts and more about encouraging them to trade information with one another about the risks their models do (or don’t) pose.

But even sharing that kind of information can be risky. If Google’s AI team prevents a new model from being used to engineer a deadly bioweapon during pre-release testing, should that information be shared outside Google? Would sharing it risk giving bad actors ideas about how they might get a less guarded model to perform the same task?

Commitment 3: The companies commit to investing in cybersecurity and insider-threat safeguards to protect proprietary and unreleased model weights.

This one is pretty straightforward, and uncontroversial among the AI insiders I’ve talked to. “Model weights” is a technical term for the mathematical instructions that give AI models the ability to function. The weights are what you would want to steal if you were an agent of a foreign government (or a rival corporation) trying to build your own version of ChatGPT or another AI product. And they are something AI companies have a vested interest in keeping tightly controlled.

There have already been well-publicized problems with model weights leaking. The weights for Meta’s original LLaMA language model, for example, were leaked on 4chan and other websites just days after the model was publicly released. Given the risks of further leaks, and the interest that other nations may have in stealing this technology from American companies, asking AI companies to invest more in their own security seems like a no-brainer.

Commitment 4: The companies commit to facilitating third-party discovery and reporting of vulnerabilities in their AI systems.

I’m not really sure what this means. Every AI company has discovered vulnerabilities in its models after releasing them, usually because users try to do bad things with the models or get around their guardrails (a practice known as “jailbreaking”) in ways the companies hadn’t foreseen.

The White House’s commitment calls for companies to establish a “robust reporting mechanism” for these vulnerabilities, but it’s unclear what that might mean. An in-app feedback button, similar to the ones that allow Facebook and Twitter users to report posts that violate the rules? A bug bounty program, like the one OpenAI launched this year to reward users who find flaws in its systems? Something else? We’ll have to wait for more details.

Commitment 5: The companies commit to developing robust technical mechanisms to ensure that users know when content is AI-generated, such as a watermarking system.

This is an interesting idea but leaves a lot of room for interpretation. So far, AI companies have struggled to create tools that allow people to tell whether or not they’re looking at AI-generated content. There are good technical reasons for this, but it’s a real problem when people can pass off AI-generated work as their own. (Ask any high school teacher.) And many of the tools currently promoted as being able to detect AI output can’t actually do so with any degree of accuracy.

I’m not optimistic that this problem is fully fixable. But I’m glad that companies are pledging to work on it.

Commitment 6: The companies commit to publicly reporting their AI systems’ capabilities, limitations, and areas of appropriate and inappropriate use.

Another sensible-sounding pledge, with lots of wiggle room. How often will companies be required to report on their systems’ capabilities and limitations? How detailed will that information have to be? And given that many of the companies building AI systems have been surprised by their own systems’ capabilities after the fact, how well can they really be expected to describe them in advance?

Commitment 7: The companies commit to prioritizing research on the societal risks that AI systems can pose, including avoiding harmful bias and discrimination and protecting privacy.

Committing to “prioritizing research” is about as vague as a commitment gets. Still, I’m sure this commitment will be welcomed by many in the AI ethics crowd, who want AI companies to prioritize preventing near-term harms such as bias and discrimination over worrying about doomsday scenarios, as the AI safety folks do.

If you’re confused by the difference between “AI ethics” and “AI safety,” just know that there are two warring factions within the AI research community, each of which thinks the other is focused on preventing the wrong kinds of harm.

Commitment 8: The companies commit to developing and deploying advanced AI systems to help address society’s greatest challenges.

I don’t think many people would argue that advanced AI should not be used to help address society’s greatest challenges. The White House lists “cancer prevention” and “mitigating climate change” as two areas where it would like AI companies to focus their efforts, and it will get no disagreement from me there.

What makes this goal somewhat complicated, though, is that in AI research, what starts out looking frivolous often turns out to have more serious implications. Some of the technology that went into DeepMind’s AlphaGo, an AI system that was trained to play the board game Go, proved useful in predicting the three-dimensional structures of proteins, a major discovery that boosted basic scientific research.

Overall, the White House’s deal with AI companies seems more symbolic than substantive. There is no enforcement mechanism to make sure that companies follow through on these commitments, and many of them reflect precautions that AI companies are already taking.

Still, it’s a reasonable first step. And agreeing to follow these rules shows that AI companies have learned from the failures of earlier tech companies, which waited to engage with the government until they got into trouble. In Washington, at least where tech regulation is concerned, it pays to show up early.
